The main content of the control file will be the checksums for blocks of data in the file to be downloaded. What checksums should be used, and how should they be transmitted? The choice of the rsync algorithm gives us part of the answer: a weak checksum, which can be calculated in a rolling manner over the data already held by the client, must be transmitted so that the client can easily identify candidate blocks. A stronger checksum, strong enough to prevent blocks from being incorrectly identified as in common with the target file, must then be used for verification.
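To illustrate what "calculated in a rolling manner" means, here is a minimal sketch of an rsync-style weak checksum in Python. It is an illustration under stated assumptions, not zsync's actual code: the function names are hypothetical, and the 16-bit modulus and the way the two halves are packed follow the commonly described rsync scheme.

```python
MOD = 1 << 16  # assumption: each half of the checksum is kept to 16 bits


def weak_checksum(block):
    """Compute both halves (a, b) of the weak checksum directly, in O(n)."""
    n = len(block)
    a = sum(block) % MOD
    # Bytes nearer the start of the block get the larger weights.
    b = sum((n - i) * x for i, x in enumerate(block)) % MOD
    return a, b


def roll(a, b, out_byte, in_byte, n):
    """Slide an n-byte window forward by one byte in O(1)."""
    a = (a - out_byte + in_byte) % MOD
    b = (b - n * out_byte + a) % MOD
    return a, b


def combine(a, b):
    """Pack the two 16-bit halves into a single 4-byte value."""
    return a | (b << 16)


def rolling_checksums(data, n):
    """Yield (offset, checksum) for every n-byte window of data."""
    if len(data) < n:
        return
    a, b = weak_checksum(data[:n])
    yield 0, combine(a, b)
    for start in range(1, len(data) - n + 1):
        a, b = roll(a, b, data[start - 1], data[start + n - 1], n)
        yield start, combine(a, b)
```

The point of the rolling update is that the client can test every byte offset of its local data at constant cost per offset, rather than re-summing a whole block each time.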

rsync transmits a 4-byte weak checksum, and I have used the same formula, but we could transmit fewer bytes of it to reduce the size of the control file. For small files, 4 bytes might be more than is needed to efficiently reject false matches (see, for example, [[CIS2004]]).

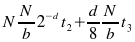

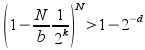

There is a tradeoff here between the download time and the processing time on the client. The download time is proportional to the amount of checksum data we transmit; part of the processing time on the client is proportional to the number of strong checksums that need to be calculated. The number of false matches on weak checksums (and hence unnecessary strong checksum calculations) is proportional to the number of hashes calculated by the client (which is roughly the file size on the client) times the likelihood of a false match. Adding this to the download time for the weak checksums, we have:

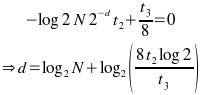

(where d is the number of bits of weak checksum transmitted per block, t2 is the time to calculate a strong checksum, and t3 is the download time for each weak checksum.) This is a simple minimisation problem, with minimum time given by:

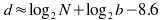

t3 is easy to know for any given client, but of course varies between clients - a client on a modem will view download data as more expensive, and so prefer a shorter weak checksum and more CPU time used on the client instead. Similarly t2 varies depending on the speed of the client computer; it also depends on the blocksize (OpenSSL on my computer can calculate the MD4 checksum of ~200MB per second - although this drops for very small blocksizes). The server will have to assume some reasonable default for both values. For 512kbps transfer speeds (standard ADSL) and a typical desktop computer able to calculate strong checksums at 200MB/s, we have (for large blocksizes, say >512 bytes):

For the moment, I have chosen to send a whole number of bytes; and it is better to err on the side of sending too many weak checksum bits than too few, as the client performance degrades rapidly if the weak checksum is too weak. For example, on a 600MB ISO file, a 3-byte weak checksum causes the client to take an extra minute of CPU time (4 minutes, against 3 minutes when 4 bytes of weak checksum were provided) in a test conducted with zsync-0.2.0 (pre-release). In practice, this means that 4 bytes of weak checksum is optimal in most cases, but for some smaller files 3 bytes is better. It may be worth tweaking the parameters of this calculation in specific circumstances, such as when most clients are fast computers on very slow network connections (desktop computers on modems), although in these circumstances the figures here will still be small relative to the total transfer size.
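The tradeoff between download time and client CPU time can also be explored numerically. The sketch below assumes a cost model of the form (N/b)·d·t_bit + N·(N/b)·2^(-d)·t_strong, which is one reading of the description above and not necessarily the exact expression used in the original formulas. The names `t_bit` (download time per bit of weak checksum) and `t_strong` (time per strong checksum calculation), the 2048-byte blocksize, and the function names are all assumptions made for illustration.

```python
def total_time(d_bits, file_size, block_size, t_bit, t_strong):
    """Assumed cost model: weak-checksum download time plus the CPU time
    spent on strong checksums triggered by false weak matches."""
    blocks = file_size / block_size
    download = blocks * d_bits * t_bit
    # ~file_size client offsets, each compared against `blocks` target
    # checksums, each comparison falsely matching with probability 2^-d.
    false_matches = file_size * blocks * 2.0 ** -d_bits
    return download + false_matches * t_strong


def best_weak_checksum(file_size, block_size, t_bit, t_strong):
    """Return (best_bits, whole_bytes), rounding up to a whole byte."""
    best_bits = min(
        range(1, 33),
        key=lambda d: total_time(d, file_size, block_size, t_bit, t_strong),
    )
    return best_bits, (best_bits + 7) // 8


# Assumed figures: a 512 kbps link and strong checksums at 200 MB/s.
BLOCK = 2048
T_BIT = 1 / 512_000
T_STRONG = BLOCK / 200e6

large = best_weak_checksum(600_000_000, BLOCK, T_BIT, T_STRONG)
small = best_weak_checksum(1_000_000, BLOCK, T_BIT, T_STRONG)
```

Under these assumptions the model selects 4 bytes for a 600MB file and 3 bytes for a 1MB file, in line with the observation that 4 bytes suits most cases while 3 bytes is better for some smaller files.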

The strong checksum is a different problem. It must be sufficiently strong that, in combination with the weak checksum, there is no significant risk of a block being identified as common to the available local data and the target file when in fact the blocks differ. rsync uses an MD4 checksum of each block for this purpose.

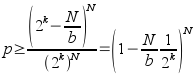

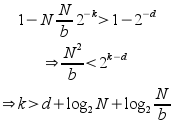

I have continued to use MD4 for the moment. There are probably alternatives which would be more efficient with CPU time, but this is not a scarce quantity for zsync. What is of interest is the amount of data that must be transmitted to the client: a full MD4 checksum requires 16 bytes. Given a file of length N and blocksize b, there are N/b blocks in the file; assume, for simplicity, that the client also has N bytes of potential local data (and so ~N possible blocks of local data). If there is no data in common, k bits of checksum are transmitted, and the checksums are uniformly and independently distributed, then the chance of no collisions (that is, of no data being incorrectly believed to be in common, ignoring the weak checksum for now) is:

(bound derived by assuming the worst case, N/b distinct hashes from the server; the hashes on the client must not include any of these from the server.) To derive a value for k, we want this probability to be arbitrarily close to 1; say for some d:

Approximating the LHS and manipulating:
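The displayed expressions for this derivation can be reconstructed from the definitions given (N, b, k and d). The following is such a reconstruction, assuming each of the ~N client hashes must independently avoid N/b server hashes out of 2^k possible values, and using the first-order approximation (1 - x)^N ≈ 1 - Nx for small x:

```latex
\begin{align*}
P(\text{no collisions}) &\ge \left(1 - \frac{N}{b\,2^{k}}\right)^{N} \\[4pt]
\left(1 - \frac{N}{b\,2^{k}}\right)^{N} &\ge 1 - 2^{-d} \\[4pt]
1 - \frac{N^{2}}{b\,2^{k}} \ge 1 - 2^{-d}
\quad &\Longrightarrow \quad
k \ge d + 2\log_2 N - \log_2 b
\end{align*}
```

The last line can equivalently be written as k ≥ d + log2 N + log2(N/b).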

To get a reasonable certainty of no false matches, say a failure probability of about one in a million, we can take d=20; this formula then gives us an easy way to calculate how many bits of the strong checksum have to be included in the .zsync file. It can be rounded up to the nearest byte for convenience; but by keeping this value low, we reduce the size of the .zsync. This reduces the storage requirement on the server, and the total amount that the client must download.
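As a worked example, the calculation can be sketched in Python. This assumes the bound takes the form k ≥ d + 2·log2(N) − log2(b), which follows from the assumptions stated above (N/b server hashes, ~N client hashes, uniform independent k-bit values) but is a reconstruction rather than a quoted formula. The function names are hypothetical, and the result is capped at the 128 bits of a full MD4 checksum.

```python
import math

MD4_BITS = 128  # a full MD4 checksum is 16 bytes


def strong_checksum_bits(file_size, block_size, d=20):
    """Bits of strong checksum needed for a false-match probability of
    roughly 2^-d, under the assumed bound k >= d + 2*log2(N) - log2(b)."""
    k = d + 2 * math.log2(file_size) - math.log2(block_size)
    return min(MD4_BITS, max(1, math.ceil(k)))


def strong_checksum_bytes(file_size, block_size, d=20):
    """Round the bit count up to a whole number of bytes."""
    return (strong_checksum_bits(file_size, block_size, d) + 7) // 8


# Example: a 600MB file with an assumed 2048-byte blocksize.
iso_bits = strong_checksum_bits(600_000_000, 2048)
iso_bytes = strong_checksum_bytes(600_000_000, 2048)
```

For the 600MB example this gives 68 bits, or 9 bytes after rounding up, compared with 16 bytes for the full MD4 checksum.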